Robodebt: When automation fails

Lessons from Australia's use of algorithms to detect welfare fraud become more relevant in the age of AI

From 2016 to 2020, the Australian government operated an automated debt assessment and recovery system, known as “Robodebt,” to recover fraudulent or overpaid welfare benefits. The goal was to save $4.77 billion through debt recovery and reduced public service costs. However, the algorithm and policies at the heart of Robodebt caused wildly inaccurate assessments, and administrative burdens that disproportionately impacted those with the least resources. After a federal court ruled the policy unlawful, the government was forced to terminate Robodebt and agree to a $1.8 billion settlement.

Robodebt is important because it is an example of a costly failure of automation. By automation, I mean the use of data to create digital defaults for decisions. This could involve the use of AI, or it could mean the use of algorithms reading administrative data. Cases like Robodebt serve as canaries in the coalmine for policymakers interested in using AI or algorithms as a means to downsize public services on the hazy notion that automation will pick up the slack. But I think they are missing the very real risks involved.

To be clear, the lesson is not “all automation is bad.” Indeed, it offers real benefits in potentially reducing administrative costs and hassles and increasing access to public services (for example, automated or “ex parte” renewals for Medicaid, which Republicans are considering limiting in their new budget bill). It is this promise that makes automation so attractive to policymakers. But it is also the case that automation can be used to deny access to services, and to put people into digital cages that are burdensome to escape from. This is why we need to learn from cases where it has been deployed.

The experience of Robodebt underlines the dangers of using citizens as lab rats to adopt AI on a broad scale before it has been proven to work. Alongside the parallel collapse of the Dutch government childcare system, Robodebt provides an extraordinarily rich text to understand how automated decision processes can go wrong.

I recently wrote about Robodebt (with co-authors Morten Hybschmann, Kathryn Gimborys, Scott Loudin, and Will McClellan), both in the journal Perspectives on Public Management and Governance and as a teaching case study at the Better Government Lab.

Fraud Prevention as a Blind Spot

In 2013, Prime Minister Tony Abbott of the Liberal Party led a new conservative government to power. He pledged to streamline the civil service and cut spending by recovering fraudulent or overpaid welfare benefits—or, in the words of the Minister for Social Services and future Prime Minister, Scott Morrison, to become a “strong welfare cop.”

To reduce costs, the government focused program design on data matching, automation, and the use of third-party debt collection agencies. Automation and outsourcing were expected to enable the government to investigate and recover overpayments on a much greater scale than was possible with individual civil servants.

By the time the program was scrapped, nearly 381,000 Australians received compensation or had their debts reduced to zero. Many members of the public felt as if they were being accused of fraud. Government statements and media coverage fed this confusion, conflating overpayments on the part of the government with deliberate fraud by individuals. Beliefs about a punitive search for fraud were fed by headlines like:

Human Services Minister Alan Tudge said on TV: “We will find you, we will track you down, and you will have to repay those debts, and you may end up in prison.”

The invocation of “integrity” and “fraud prevention” is a frequent claim that justifies burdens, even in contexts where fraud is minimal and fraud prevention efforts are minimally effective. For example, DOGE has frequently invoked fake “fraud” statistics as a justification to attack programs like Social Security, and “fraud” has become a justification by Republicans to introduce administrative burdens that will serve as de facto cuts to programs like Medicaid.

The pursuit of fraud reflected a clawback logic with the Robodebt case. Think of clawback logic as a particular type of institutional logic: the imperative to demand the return of resources the state believes have been given incorrectly to citizens. Once this logic defines the purpose of automation at the expense of other values, things will go badly wrong.

Under Robodebt, many vulnerable Australians (those receiving benefits or pensions for years, living with disability, or experiencing chronic underemployment) were sent notices that they owed thousands of dollars. Some took out loans, maxed out credit cards, or sold their cars to meet their debt obligations. At one point, the government even threatened to restrict the movement of “welfare debt dodgers” with international travel bans. The resulting psychological costs included stress, trauma, depression, and suicidal ideation, and in at least two cases were associated with suicide.

Robodebt used administrative data to identify potential welfare overpayments. At the heart of the Robodebt algorithm was a faulty assumption: that people’s income was stable. (Current US proposals for work reporting requirements in Medicaid make the same faulty assumption). The assumption was reasonable for those with salaried jobs, but unreliable for welfare recipients working changing or sporadic hours.
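To see why the stable-income assumption matters, here is a minimal sketch in Python. It assumes a simplified version of income averaging, in which a person's annual income is spread evenly across every fortnight of the year. The income-free area, taper rate, and payment amount are invented for illustration and are not actual benefit rules.

```python
# Hypothetical benefit rules, invented for illustration only.
INCOME_FREE_AREA = 400.0  # fortnightly income allowed before the benefit is reduced
TAPER = 0.5               # benefit lost per dollar earned above the free area
MAX_BENEFIT = 500.0       # full fortnightly benefit


def entitlement(fortnightly_income):
    """Benefit a person is entitled to in one fortnight, given that fortnight's income."""
    reduction = max(0.0, fortnightly_income - INCOME_FREE_AREA) * TAPER
    return max(0.0, MAX_BENEFIT - reduction)


def averaged_debt(incomes, benefits_paid):
    """Debt alleged when annual income is assumed to be spread evenly over the year."""
    average_income = sum(incomes) / len(incomes)
    assumed = entitlement(average_income)
    return sum(max(0.0, paid - assumed) for paid in benefits_paid)


def actual_debt(incomes, benefits_paid):
    """Debt owed when each fortnight is assessed on what was really earned."""
    return sum(
        max(0.0, paid - entitlement(income))
        for income, paid in zip(incomes, benefits_paid)
    )


# Someone who worked for half the year (no benefit claimed),
# then was unemployed for half the year (full benefit, correctly paid).
incomes = [2000.0] * 13 + [0.0] * 13
benefits_paid = [0.0] * 13 + [MAX_BENEFIT] * 13

print(averaged_debt(incomes, benefits_paid))  # 3900.0
print(actual_debt(incomes, benefits_paid))    # 0.0
```

In this made-up case the person was paid exactly what they were owed in every fortnight, yet averaging produces a debt of $3,900. The error comes entirely from pretending that income earned in one half of the year was also earned in the other.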

Bad Assumptions = Bad Outcomes

The Robodebt scheme incorporated a second faulty assumption that compounded the first: default judgments made in the absence of a response from the welfare recipient were interpreted as acceptance of the assumed overpayment, as confirmation of the algorithm’s accuracy. The burden of proof was placed on clients, while the system did not explain how debts were calculated or when the supposed overpayment occurred. Furthermore, the scheme made contesting overpayments nearly impossible. To cut costs, all interfaces were put online, excluding recipients with no internet access or low digital literacy.

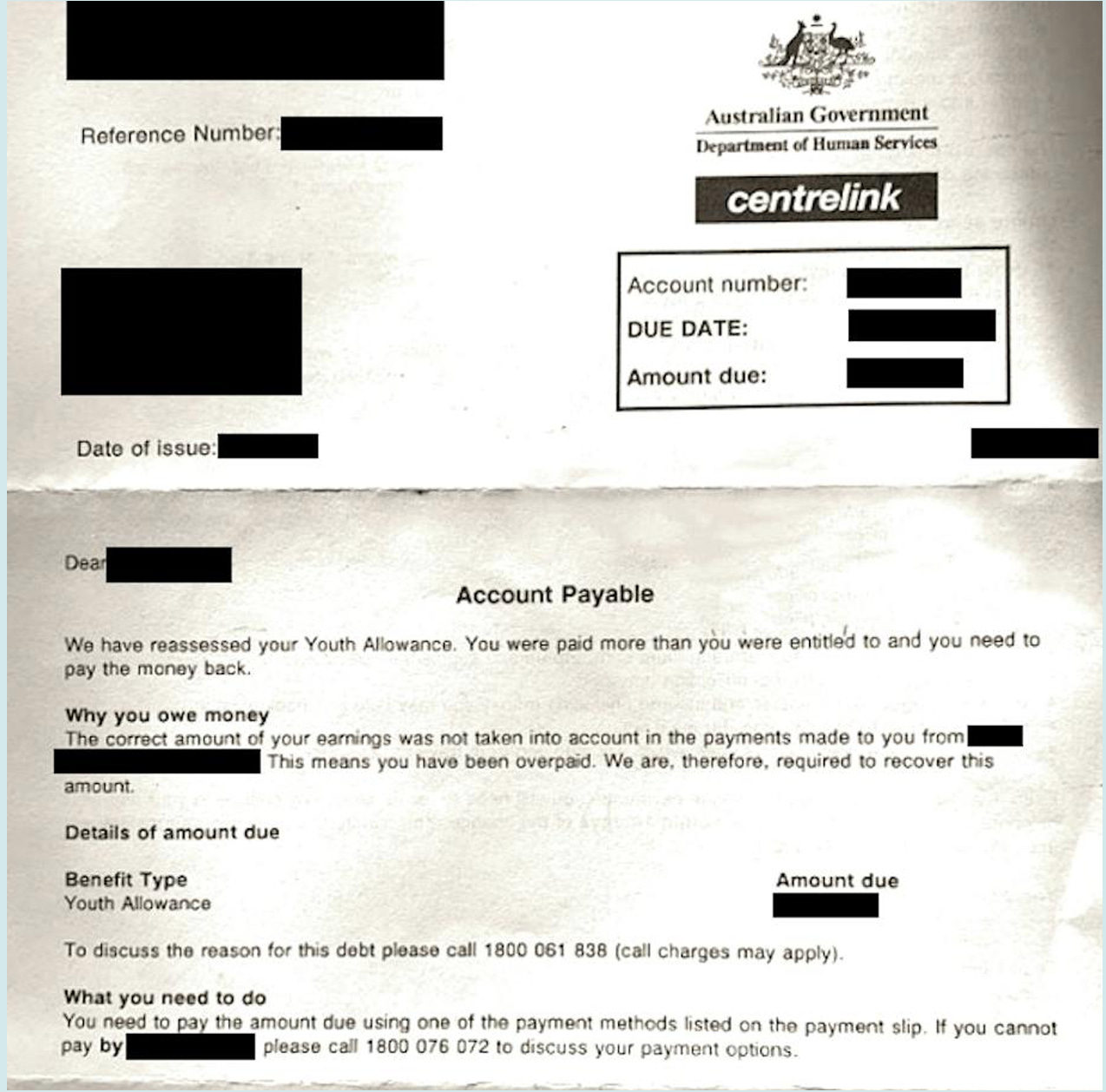

Individuals flagged by the Robodebt system as having received overpayments were sent a letter to the address listed in the agency database. After receiving the letter, they were required to visit a website to respond to the claim. If they failed to respond after several notices, they forfeited their opportunity to contest the charge. Consequently, the overpayment was registered as debt, any tax refund garnished, and the remaining debt sent to private debt collectors.

For people who did receive the notice, the information lacked details about their case. They were told they had received welfare overpayments and were required to repay by a certain date. Many without access to, or confidence in, technology were unable to contest. Recipients were not told extensions were available. The notice provided no contact number for recipients to call and ask for details, nor did it state the period of alleged overpayments, the information needed to verify their income, or the next steps.

When individuals were directed to the website, they found a document in legalistic language reiterating the notice letter and providing more information about the policy. They were asked to scroll through the document on the browser and click “Next” at the bottom of the screen for additional steps. Many clicked “Next” without carefully reading the entire document, eager to learn what options they might have for contesting the charge. However, at the end of the screen was text explaining that by clicking “Next” the person admitted to the overpayment and consented to a repayment plan. Those who were confused, anxious, or did not understand the agreement and clicked “Next” were unable to contest.

In other words, people who read online explanations the way people typically do, skimming quickly and moving on, inadvertently admitted guilt.

Missed Warning Signs

The problems of Robodebt were identified almost immediately. Welfare recipients detailed the enormous burdens associated with the program to legal aid advocates, journalists, and on social media. But Robodebt would remain in place for years. Policymakers will sometimes stick with a failed system rather than admit its flaws and, by extension, their responsibility.

As early as December 2016, the Australian Council of Social Service asked the government to suspend the program due to gross inaccuracies, lack of transparency, and emotional distress. Yet the program stayed in place until 2020, even after an investigation by the Commonwealth Ombudsman, two Australian Senate inquiries, and a series of Administrative Appeals Tribunal cases questioning the scheme’s lawfulness.

Front-line benefits staff were either not consulted or their feedback was ignored. Department of Human Services compliance officers flagged problems with the program in early 2016 verbally and in writing, or via whistleblowing processes, informing the national media that debts were being imposed without proof. These critiques were dismissed by agency leadership.

The program’s success was measured by gross savings, not efficiency or accuracy. The savings target reflected Abbott and Morrison’s goal of using technology to reclaim money. Senior public servants were acutely aware of the desire for savings, which became de facto targets. The pressure to “just get it done” led them to set aside concerns about problems.

Burdens Under Clawback Logics

The clawback logic of the Robodebt case is not an outlier; it can arise whenever a government is seeking to reclaim resources from individuals. Governments have extraordinary powers over how they establish and collect overpayments and debts, with tools such as limiting future benefit payments, withholding tax refunds, garnishing wages, or referring debts to private collectors.

The use of clawback logics illustrates how administrative burdens reinforce inequality, targeting welfare users who already have limited resources and then imposing onerous demands upon populations who may struggle to deal with them. The Royal Commission Report on Robodebt noted that the population of former welfare recipients was likely to contain many who were vulnerable “due to physical or mental ill health, financial distress, homelessness, family and domestic violence, or other forms of trauma” and that provisions to offer support for those groups were “hopelessly inadequate.”

The burdens that citizens faced were extraordinary. But it is also worth noting that another group experienced burdens with the Robodebt case. These were public service workers who saw and understood that the system was broken, but were pushed to enforce it regardless. One caseworker described:

doing myself damage by continuing to work within an unfair system of oppression that I thought was designed to get people rather than support them, which was continuing to injure people every day, and which transgressed my own moral and ethical values.

Keeping Humans in the Loop

It is perfectly fine to be excited about AI and other mechanisms to automate public service delivery. But that cannot mean abandoning humans. Robodebt could have been prevented from failing had policymakers listened to warnings. A best practice principle for both the use of algorithms and AI systems is to keep humans in the loop to warn of automation failures.

The Robodebt case shows voices—of senior public employees, caseworkers, clients, and advocacy groups—being ignored, discouraged, or overlooked. Feedback loops to warn of system failure were disabled or downplayed.

While it might have seemed easier for public servants in a politicized environment not to share bad news that they knew their political superiors did not want to hear, they would ultimately be punished for staying silent. After the scandal could no longer be ignored, senior public servants were reprimanded and referred for disciplinary action by the Australian Public Service Commission for failing to convey information about the risks of the scheme to their political superiors.

The temptation to exclude human feedback is stronger in automated systems, whose attraction for policymakers is, in part, to save money by not relying on public employees. But removal of voice reduces the possibility of incremental adaptation and correction, leading to a system collapse at great financial and political cost for the government, and personal cost for the clients affected.

Lessons for Governments

What are the other lessons for government here? I have taught the Robodebt case a couple of times with graduate students in public policy, and here is the list we came up with.

Establish legal basis for project

Understand the values you are trying to maximize (e.g. fraud reduction), balance them with other important values (e.g. access, due process), and have credible measures to track progress

Politicians should demand, and civil servants provide, clear briefing on political risks of automation failure, and update those briefs based on implementation data

Maintain feedback loops that can provide an early warning system—from employees, clients, advocates, media

Pilot the automation system if possible; if not, have rigorous early assessment and willingness to adjust

Don’t put impossible burdens of proof on citizens

The default, automated position should not be punitive: automatic decisions to impose losses should trigger secondary review and come with a clear appeals process
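The last two items on this list can be sketched in code. This is a hypothetical illustration in Python, not a description of any real system: the `Flag` record, the `decide` function, and the outcome labels are all invented names. The design choice it shows is that an automated flag on its own never produces a debt, and that a client's silence is never treated as confirmation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    NO_ACTION = "no action"
    HUMAN_REVIEW = "send to caseworker for review"
    DEBT_WITH_APPEAL = "raise debt, with explanation and appeal rights"


@dataclass
class Flag:
    """A hypothetical automated flag for a possible overpayment."""
    alleged_overpayment: float
    client_responded: bool  # recorded, but deliberately not used as evidence


def decide(flag: Flag, reviewer_confirmed: Optional[bool] = None) -> Outcome:
    """Route a flag. reviewer_confirmed is None until a person has checked the case."""
    if flag.alleged_overpayment <= 0:
        return Outcome.NO_ACTION
    if reviewer_confirmed is None:
        # The automated default is review, not punishment,
        # whether or not the client has replied.
        return Outcome.HUMAN_REVIEW
    if reviewer_confirmed:
        return Outcome.DEBT_WITH_APPEAL
    return Outcome.NO_ACTION


silent_client = Flag(alleged_overpayment=3900.0, client_responded=False)
print(decide(silent_client).value)
print(decide(silent_client, reviewer_confirmed=False).value)
print(decide(silent_client, reviewer_confirmed=True).value)
```

The burden of proof sits with the agency here: until a reviewer has confirmed the figure against actual records, the only thing the system can do is ask a person to look.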

Let me acknowledge a potential irony here: I am asking for more process to reduce administrative burdens. Why should politicians listen? In the case of Robodebt, the scandal became a political anchor on the incumbent party, and the equivalent child benefits algorithm problems in the Netherlands brought down the government. The public really, really does not like governments using automated systems to punish them.

This is a big and important point so I am going to put in big, bold letters:

POLITICIANS UNDERESTIMATE THE POLITICAL RISKS OF AUTOMATION FAILURE.

Equating overpayments with fraud is an insidious misnomer. In the SNAP (Food Stamp) world there are three types of over- and underpayment errors: administrative error, inadvertent client error, and intentional program violation. As you can see from the names, only one could be considered fraud.

If it is an administrative error (quite common), the client is still responsible for repaying 100% of the overpayment under Federal law. As the eligibility system degrades, rushed staff let paperwork fall through the cracks, and in the end the client is held responsible for the error. Is that fair? What if the error is thousands of dollars? All very common from what I saw.

How are we helping people become economically independent if we suddenly saddle them with huge debts?